Charles' Blog

- Psyonix' Epic Fail

- How single-user git-over-ssh works

- Python Project Progression

- Python/Pydantic Pitfalls

- Python has sum types

- Error handling in Rust

- Considered harmful

- Short-form annoyances

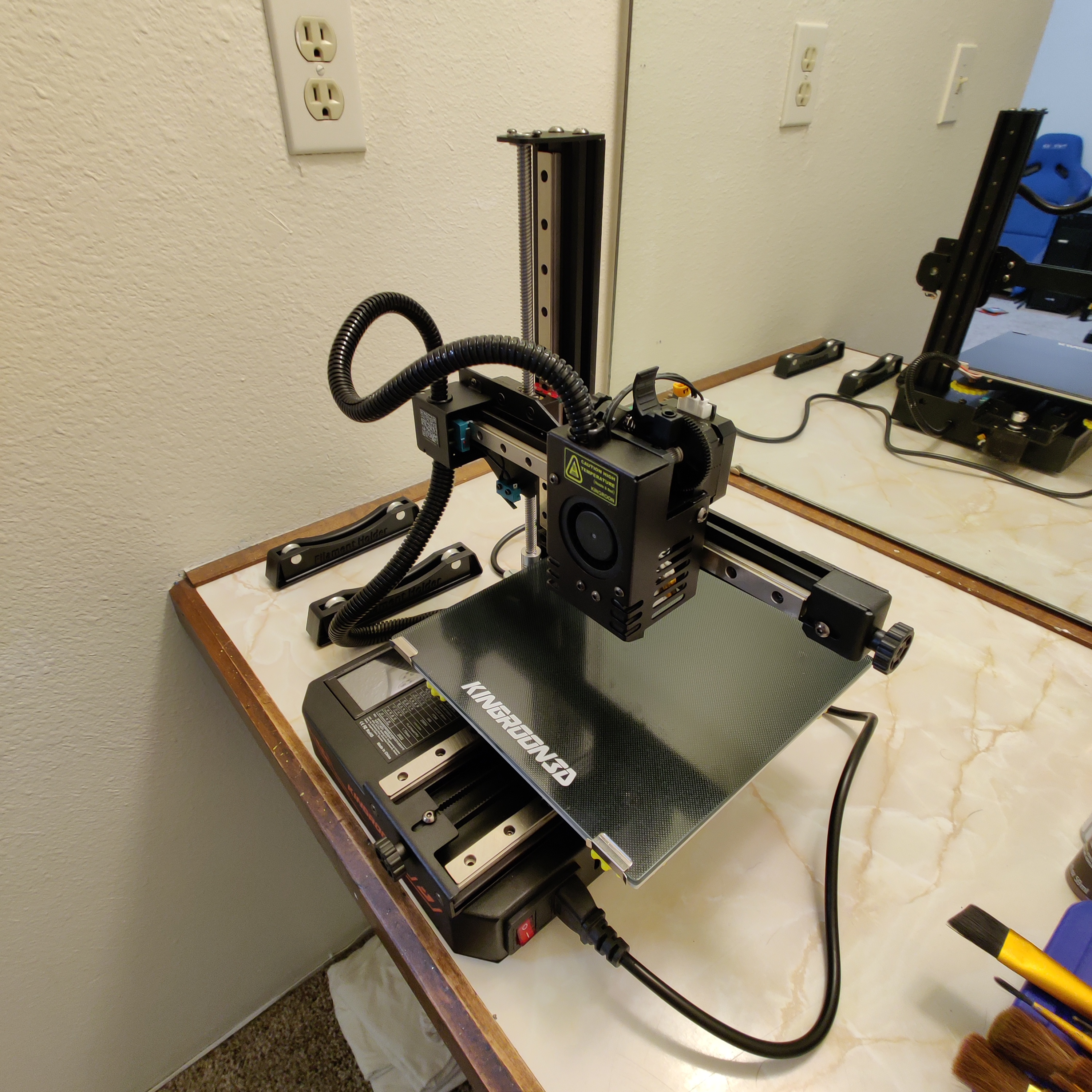

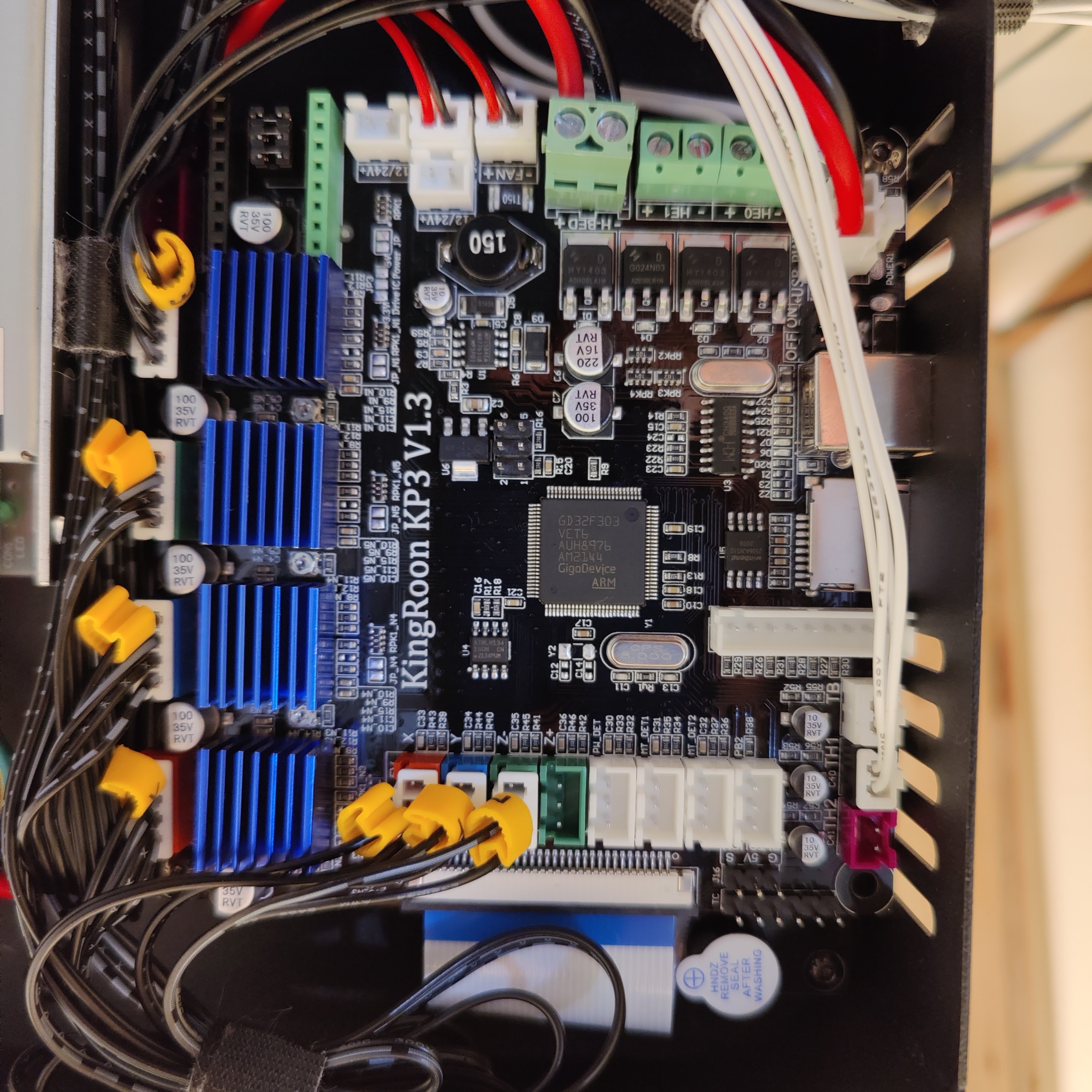

- 3D Printing

Psyonix' Epic Fail

Psyonix, Epic, anticompete, and you!

So Epic Games is buying Psyonix. Everyone's upset for a multitude of reasons, a lot of which aren't the right ones. A few very special people are even content with this news for the wrong reasons, which is even worse. Now, imagine the next three paragraphs as panels in the expanding brain meme.

- Lots of people are just upset that Rocket League is going to show up on the Epic Games Store (EGS) and aren't reading any further into the issue. This opinion is not so bad because at least they're still doing the right thing, just for the wrong reasons. Also, it seems nearly universally agreed that the EGS is... not very good.

- A lot of people are indifferent and just want everyone to shut up and move on. Obviously, this accomplishes nothing other than making the people who know what's going on talk more (see: this blog post).

- Some people are actually supportive of this, their reasoning being that they "can still play it on Steam since they already own it there, and this will just bring the game to a wider audience". The last part may be true (summary: ~40% of EGS users come from consoles rather than being existing PC/Steam players). I say "may" because Rocket League's playerbase is already mostly console players. Either way, this still misses the actual issue at hand.

Very few people are seeing the actual issue at hand, however, which is that by removing the game from Steam, Epic/Psyonix (I don't know which is actually in charge of this decision, but if I had to guess I would pick Epic) would be engaging in extremely anticonsumer and anticompetitive behavior. It's true that neither party has yet confirmed or denied that Rocket League will be removed from Steam after it appears on the EGS.

However, if Epic/Psyonix wanted everyone to calm down, you'd think they'd just announce that Rocket League will remain purchasable from the Steam store and fully supported as it has been, and just also be available on the EGS. There would be no issue if this were the case, as then users would be able to choose whether to play on Steam or on the EGS, which is the ideal situation. The lack of such an announcement says to me that they've already decided internally to remove it from the Steam store and they just don't want to deal with the backlash from the public yet.

Some other issues that have not been addressed are whether current owners of the game on Steam will be able to buy Keys, Rocket Passes, or DLC cars once the game appears on the EGS, and whether Psyonix will continue to support Rocket League on Linux. Since the EGS has no Linux version, it seems reasonable (from a business perspective) to me that Psyonix would stop ensuring that updates worked on Linux if Rocket League were made unpurchasable on Steam. Valve's Proton is very good, so that might be an option, but if it comes to that I'd bet a lot of us Linux-using software-vegans won't be playing the game anyway.

I'm not going to re-explain why exclusives are harmful here since this has already been done by several others at much higher quality than I could manage. If you're tired of reading, this video does a really good job of laying out the issue in terms of streaming services. This translates really well to game marketplaces since both are just DRM CDNs at their core.

TL;DR: To oversimplify, if you're annoyed with having to pay for multiple streaming services that are all lacking important features, you should also be annoyed with companies like Epic buying exclusive rights to having games on their launchers.

UPDATE: As expected, in late September of 2020, Rocket League was removed from the Steam store. Linux support was also dropped/disabled.

How single-user Git-over-SSH works

I had to read through so much source code to figure this out

Have you ever wondered how GitHub, GitLab, Gogs, Gitea, and so on allow multiple users to push and pull data from repos with only one unix user? Perhaps you want to know how this is done so you can write your own version of the aforementioned type of software that doesn't suck. That's how I got here. Anyway, this is how it's done:

OpenSSH gives you an option (ForceCommand in /etc/ssh/sshd_config) to force the use of a particular command on connection, overriding the one the client actually wants to run. When this option is set, the client's "intended command" gets stored in an environment variable called $SSH_ORIGINAL_COMMAND. This can be used to force the execution of your own script (or binary) that allows and disallows "intended commands" each time anyone tries to do anything with this account over SSH.
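A minimal sketch of such a forced command in Python, assuming the only things you want to allow are the commands a Git client sends over SSH: git-upload-pack (clone/fetch), git-upload-archive (git archive --remote), and git-receive-pack (push). The names parse_git_command and main are hypothetical, and the actual hand-off to git is left as a comment because it depends on how your software lays out repositories on disk.

```python
import shlex
import sys
from collections.abc import Mapping

# What a Git client asks for over SSH, e.g. "git-upload-pack 'user/repo.git'"
READ_COMMANDS = {"git-upload-pack", "git-upload-archive"}  # clone/fetch/archive
WRITE_COMMANDS = {"git-receive-pack"}  # push


def parse_git_command(original: str) -> tuple[str, str]:
    """Split $SSH_ORIGINAL_COMMAND into (verb, repo path), or refuse it."""
    try:
        parts = shlex.split(original)
    except ValueError:  # e.g. unbalanced quotes
        raise PermissionError(f"unparseable command: {original!r}") from None
    if len(parts) != 2:
        raise PermissionError(f"unsupported command: {original!r}")
    verb, repo = parts
    if verb not in READ_COMMANDS | WRITE_COMMANDS:
        raise PermissionError(f"command not allowed: {verb!r}")
    # ssh:// URLs send "/user/repo.git", scp-style URLs send "user/repo.git"
    repo = repo.lstrip("/")
    if not repo or ".." in repo.split("/"):
        raise PermissionError(f"bad repository path: {original!r}")
    return verb, repo


def main(environ: Mapping[str, str]) -> int:
    original = environ.get("SSH_ORIGINAL_COMMAND")
    if original is None:
        # No command at all means someone tried to open an interactive shell
        print("interactive shells are not allowed", file=sys.stderr)
        return 1
    try:
        verb, repo = parse_git_command(original)
    except PermissionError as err:
        print(err, file=sys.stderr)
        return 1
    # Hypothetical next step: map `repo` to a directory on disk and exec the
    # real git-upload-pack/git-receive-pack against it
    print(f"would run {verb} on {repo}", file=sys.stderr)
    return 0
```

Installed as the ForceCommand, the script would end with sys.exit(main(os.environ)).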

Additionally, the ~/.ssh/authorized_keys file allows you to specify a per-key forced command. This means that you can set an option (say, --with-key) for the per-key override that isn't present in the global override in /etc/ssh/sshd_config. Now, in your forced command, you can disallow write access to connections that are missing the --with-key argument, since it's only present if a user has uploaded an SSH key.
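A sketch of both halves of that idea, assuming the hypothetical --with-key=<key_id> flag described here: one function writes the authorized_keys entry for an uploaded key, the other inspects the forced command's own arguments. The restrict option (available since OpenSSH 7.2) turns off forwarding, PTY allocation, and ~/.ssh/rc for that key; the program path and key material below are placeholders.

```python
import argparse
from typing import Optional


def authorized_keys_line(program: str, key_id: int, public_key: str) -> str:
    """Build an authorized_keys entry that forces `program --with-key=<key_id>`."""
    public_key = public_key.strip()
    if '"' in program or "\n" in public_key:
        raise ValueError("refusing to write an entry that would corrupt the file")
    # `restrict` disables forwarding, PTY allocation, and ~/.ssh/rc for this key
    return f'command="{program} --with-key={key_id}",restrict {public_key}'


def key_id_from_argv(argv: list[str]) -> Optional[int]:
    """Return the key_id if sshd ran us via a per-key entry, else None."""
    parser = argparse.ArgumentParser(prog="your-software")
    parser.add_argument("--with-key", dest="key_id", type=int)
    return parser.parse_args(argv).key_id


def may_write(argv: list[str]) -> bool:
    # Only the per-key command passes --with-key, so a missing flag means the
    # connection came through the global ForceCommand with no known key
    return key_id_from_argv(argv) is not None
```

Inside the forced command you would call may_write(sys.argv[1:]) before allowing git-receive-pack.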

Even further, you can provide the --with-key argument a key_id value that allows your software to know exactly which key was used for this connection. With that information, you can associate the SSH key with a user account and, for example, allow them extra read/write access to their private repositories and extra write access to their public ones. It's up to your software to keep a database of key_id <-> SSH key <-> user associations, however.
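One way that database and the resulting access check could look, sketched with the standard library's sqlite3. The schema, table names, and the owner-only policy (no collaborators, no organizations) are all assumptions for illustration: anyone may read public repositories, while pushing anywhere or reading a private repository requires a key belonging to the owner.

```python
import sqlite3
from typing import Optional

SCHEMA = """
CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL);
CREATE TABLE ssh_keys (
    id INTEGER PRIMARY KEY,  -- the key_id handed to --with-key
    user_id INTEGER NOT NULL REFERENCES users(id),
    public_key TEXT UNIQUE NOT NULL
);
CREATE TABLE repos (
    id INTEGER PRIMARY KEY,
    owner_id INTEGER NOT NULL REFERENCES users(id),
    path TEXT UNIQUE NOT NULL,
    private INTEGER NOT NULL  -- 0 or 1
);
"""


def user_for_key(db: sqlite3.Connection, key_id: Optional[int]) -> Optional[int]:
    """Resolve a key_id (None for connections without a key) to a user id."""
    if key_id is None:
        return None
    row = db.execute("SELECT user_id FROM ssh_keys WHERE id = ?", (key_id,)).fetchone()
    return row[0] if row else None


def allowed(db: sqlite3.Connection, key_id: Optional[int], repo_path: str, write: bool) -> bool:
    row = db.execute(
        "SELECT owner_id, private FROM repos WHERE path = ?", (repo_path,)
    ).fetchone()
    if row is None:
        return False  # unknown repository
    owner_id, private = row
    is_owner = user_for_key(db, key_id) == owner_id
    if write or private:
        return is_owner  # pushes and private reads need the owner's key
    return True  # anyone, even without a key, may read public repositories
```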

While you're messing with the sshd_config, you'll need to add PermitEmptyPasswords yes and you'll probably also want to add DisableForwarding yes. Thankfully, you can restrict the effects of those two options to apply only to your software's user and not to everyone on the server by using a Match section. All together, your sshd_config will have a new section like this:

```
Match User your_software_user
    PermitEmptyPasswords yes
    DisableForwarding yes
    ForceCommand /path/to/your/software
```

References

- man 5 sshd_config for /etc/ssh/sshd_config options
- man 8 sshd for the ~/.ssh/authorized_keys file format and options
- Both of the above for the uses of the $SSH_ORIGINAL_COMMAND environment variable
- The Gogs codebase for reverse-engineering all of the above

Python project progression

Things you may discover as your Python application grows over time

Getting started

So, you have discovered Python. You've singlehandedly written a useful program using only the standard library with relative ease, without the usual fuss associated with learning a new programming language and setting things up. You've found this experience quite enticing.

Managing dependencies

You've decided to grow your program, which requires using a few third-party libraries. First, you need to decide how you'd like to manage your dependencies. You'll likely find several ways to do this, each providing some benefit that the previous method lacked, but also accompanied by its own shortcomings, in approximately this order:

- Use your system's package manager
  - pro: doesn't require any extra software to manage dependencies
  - con: unless you or anyone running your code uses Windows
  - con: you're (typically) at the mercy of the singular version of a given library provided by your package manager (i.e. you do not get to choose the version)
- Manually install things with pip
  - pro: straightforward
  - pro: you can choose the version of each direct dependency
  - con: imperative package management isn't great for repeatability or documentation purposes
- Maintain a requirements.txt file containing your direct dependencies and use pip install -r requirements.txt to install your dependencies
  - pro: declarative package management
  - con: no control over transitive dependency versions between pip installs
- Maintain a requirements.in file containing your direct dependencies, use pip-compile from pip-tools to generate a locked requirements.txt file, and then use pip install -r requirements.txt to install your dependencies
  - pro: transitive dependency version pinning
  - con: no way to separate dependencies only used for tests/examples versus those required for library publication or application deployment
- Do the above twice, once for runtime requirements and a second time for test/example requirements (with different filenames, probably like requirements{,-dev}.{in,txt})
  - pro: separation of runtime and test/example dependencies
  - con: litters the root of your project with four files instead of just two
- Use poetry
  - pro: you're back to only needing two files
  - con: it isn't exactly standard tooling
  - con: it tries to do a lot more than just manage dependencies
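What "locked" means in practice is that every line of the compiled requirements.txt, transitive dependencies included, pins an exact version with ==. A rough check for that might look like the sketch below; unpinned is a hypothetical helper (not part of pip-tools), and its pattern is deliberately simplistic, so URL requirements and less common PEP 508 forms will simply be reported as unpinned.

```python
import re

# name, optional [extras], "==", exact version, optional "; environment marker"
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^\s;]+(\s*;.*)?$")


def unpinned(requirements_text: str) -> list[str]:
    """Return the requirement lines that don't pin an exact version."""
    bad = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0]  # drop comments such as "# via requests"
        line = line.strip().rstrip("\\").strip()  # drop line continuations
        if not line or line.startswith("-"):  # blanks and options like --hash=...
            continue
        if not PINNED.match(line):
            bad.append(line)
    return bad
```

Run against a hand-maintained requirements.txt, this tends to flag lines like urllib3>=2, which is exactly the gap pip-compile closes.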

Managing dependencies part two

There's a big con of all of the above options (except poetry) that I neglected to mention: the act of installing packages is a global operation (by default), and could potentially break other Python software you're using or developing if the stars don't align (which they won't). Luckily, there are solutions to this problem; unluckily, there are a lot of them:

- pyenv - manages versions of CPython per project
  - pyenv-virtualenv - an extension for the above
    - isolate things installed via pip per project
  - pyenv-virtualenvwrapper - an extension for the above
    - adds some extra commands
- virtualenv - isolate things installed via pip per project
  - virtualenvwrapper - an extension for the above
    - adds some extra commands
- pyvenv - isolate things installed via pip per project
- pipenv - isolate things installed via pip per project
  - manages a lockfile
- venv - isolate things installed via pip per project
  - this one was made specifically to be the canonical tool for this purpose
- poetry - isolate dependencies per project
- Nix + nix-direnv - completely unrelated to the Python ecosystem but can do what all of these tools do except with a few more very useful features
- Bonus round: conda, anaconda, miniconda, mamba, micromamba
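For reference, the canonical venv tool can also be driven from Python itself. This sketch assumes the project keeps its environment in a .venv directory (a common convention, not a requirement); make_project_env is a hypothetical helper around venv.create, and in_virtualenv uses the usual sys.prefix versus sys.base_prefix comparison. with_pip=False keeps creation fast; pass True to bootstrap pip into the new environment.

```python
import sys
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    # In a venv, sys.prefix points at the environment while sys.base_prefix
    # still points at the installation it was created from
    return sys.prefix != sys.base_prefix


def make_project_env(project_dir: Path, with_pip: bool = False) -> Path:
    """Create an isolated environment in <project_dir>/.venv and return its path."""
    env_dir = project_dir / ".venv"
    venv.create(env_dir, with_pip=with_pip)
    return env_dir
```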

Understanding dependencies

Now that you've settled on a combination of tools, it's time to go on the hunt for helpful dependencies for your program. Over time you'll notice that a good fraction of projects present their documentation in different ways. It can be difficult going from one high profile project to another because not only do you need to relearn the API surface, but also how to navigate its documentation in the first place. Speaking of API surface, a lot of documentation is actually written as if it were a guide rather than an API reference, so determining the permissible uses of any given item is much harder and often ambiguous unless you read the source code of the project, or that of projects using the library in question.

Using dependencies

It's now time to actually add the dependencies you've deemed useful and worthy and start writing code using them. Uh oh, version resolution is failing? Looks like two of your direct dependencies require incompatible versions of a transitive dependency. Luckily, this is solved trivially: just kidding, it isn't.

Making your code nice (visually)

After getting out of that mess somehow, your codebase has grown in size significantly and you think it's probably time to start using an auto-formatter and a linter, especially since you'd like to bring in some extra help. For auto-formatters, you have the following choices:

- autopep8
- black
- yapf
- IDE-specific tooling (good luck running this in your CI pipeline)

You notice something peculiar: the tool you've chosen doesn't automatically sort your import statements at all. Indeed, you need a separate tool for this. Here are some options:

- lexicographically sort them like some kind of madman
- Use isort like the CIA probably does
- IDE-specific tooling (good luck running this in your CI pipeline)

Make your code nice (cognitively)

Even though it looks nice, you still feel like there are some questionable lines of code here and there. A good number of these oddities can be automatically detected and complained about by a linter, which will help you clean things up a bit more. Again, you have a number of options:

- autoflake
- bandit
- flake8
- flakehell
- prospector
- pycodestyle
- pydocstyle
  - This one actually lints your docstrings rather than your code, which is useful
- pyflakes
- pylsp
- pylint
  - I've saved this one for last because it appears to be a superset of some of the previous linters (at least it is for flake8), and it seems to be the most commonly used

Configuring stuff

Now that you've chosen all of your tooling, you should probably configure them to your liking. Or at the very least, configure them to work with each other, as a lot of them disagree by default. The rapidly growing list of configuration files is worrying you, so you decide to see if there's some alternative. To your delight, you discover PEP 518, which exists for this exact purpose. To your dismay, however, you come to learn that half of your chosen tools don't actually support this.

Gaining momentum

There are now multiple people working on your codebase, perhaps because you've started a company around this program of yours. The number of lines of code has increased rapidly and you no longer have eyes on all parts of the project. Perhaps lints stopped failing your CI pipelines in the name of feature velocity. You find yourself wishing more lints were capable of failing builds, that you and others had left more docstrings behind, or at least that you knew anything about the return value of the function you're trying to call. It would be cool if you could generate some sort of easily-navigable API reference to get a summary of how the new code works together, too. You find a few tools, only to discover that some of them are painful to set up on an existing project and that some of them refuse to document items considered "private" entirely. This is unfortunate considering you're writing an application and not a library, meaning basically all of your code is considered private.

Static typing

At the very least, you can start using yet more linters locally. More specifically, static analysis tooling. Again, you have a number of options:

- mypy
- pytype
- pyre
- pyright

These tools will help you figure out what's going on in this new code you haven't seen before. They give you a map to get out of the woods you've been feeling lost in. They also help to prevent a few kinds of duck-typing related bugs caused by incorrect assumptions about an object's properties. At this point, though, the code is so far gone that allowing one of these tools to fail CI is implausible due to the sheer amount of changes required to get CI to start passing again. And if the tools can't fail CI, you can't guarantee that other people will follow the conventions set by these new tools, which can significantly hinder their utility.
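As a small example of the kind of incorrect assumption these tools catch (the function names are hypothetical): find_age may return None, and a checker such as mypy or pyright will reject using its result as an int until the None case is handled. Without annotations, the same mistake would only surface at runtime, and only for names that happen to be missing.

```python
from typing import Optional


def find_age(ages: dict[str, int], name: str) -> Optional[int]:
    return ages.get(name)


def age_next_year(ages: dict[str, int], name: str) -> int:
    age = find_age(ages, name)
    # `return age + 1` right here would be rejected by a type checker, since
    # `age` is Optional[int]; handling None first narrows it to int
    if age is None:
        raise KeyError(name)
    return age + 1
```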

Statically typing exceptions

You've now noticed that PEP 484 provides no method to annotate exception types, nor is any existing static analysis tool capable of determining the list of exception types a given function may raise. You may be told to "just read the docs", to which you point out that there are often not docs, and sometimes they lie. Or maybe they said "just read the source code", which you know is ridiculous because that would mean you'd have to read the entire source tree of every function you'd like to know about. Or maybe "you should only handle exception types you know you can handle", to which you ask "how can I decide whether I can handle an exception if I don't know what the possible exceptions are?" and are left without an answer like the OP of this SO post.
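A small illustration of that gap: PEP 484 annotations record parameter and return types and nothing else, so the fact that this hypothetical parse_port function raises ValueError lives only in a comment (or a docstring, if someone wrote one).

```python
def parse_port(raw: str) -> int:
    """Parse a TCP port number."""
    port = int(raw)  # raises ValueError for input like "http"
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return port


# Everything the signature tells a static analysis tool: no mention of ValueError
print(parse_port.__annotations__)
# prints {'raw': <class 'str'>, 'return': <class 'int'>}
```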

Memory model

Suddenly you realize that you're not sure whether you're supposed to be able to mutate the original memory of the object being passed to the function you're currently bugfixing. You wonder whether it's possible to annotate or verify the intended ownership semantics of the object and are disappointed to learn that you (currently?) cannot. Perhaps you also wonder if a system like this could improve synchronization primitive ergonomics by forcing you to acquire a lock before granting you read or write access to the underlying data. You look for prior art on this topic and discover it has indeed been done before. Neat.
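One well-known example of this idea is Rust's Mutex<T>, where the data lives inside the lock and can only be reached through a guard. A rough Python imitation follows; the Mutex class is hypothetical (not a standard library type), and unlike Rust, Python can't stop the yielded reference from escaping the with block or stop anyone from reading _value directly, so this is a convention rather than a guarantee.

```python
import threading
from contextlib import contextmanager
from typing import Generic, Iterator, TypeVar

T = TypeVar("T")


class Mutex(Generic[T]):
    """Holds a value that is only handed out while the lock is held."""

    def __init__(self, value: T) -> None:
        self._lock = threading.Lock()
        self._value = value

    @contextmanager
    def locked(self) -> Iterator[T]:
        with self._lock:
            yield self._value


balances = Mutex({"alice": 10, "bob": 0})


def transfer(amount: int) -> None:
    # Both the read and the write happen while the lock is held
    with balances.locked() as b:
        b["alice"] -= amount
        b["bob"] += amount
```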

Performance

The performance of your application is showing signs of becoming a problem, so naturally you seek to introduce some form of parallelism (presumably you've already been using async/await for IO-bound concurrency). The obvious answer is multithreading, so you dispatch parallelizable work to a threadpool to be executed, adding locks throughout your code as necessary. Since lock acquisition/release isn't enforced particularly strongly as you learned earlier, perhaps you hit some deadlocks or data races or other parallelism-related bugs along the way.
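A minimal sketch of that threadpool-plus-locks approach using the standard library's concurrent.futures.ThreadPoolExecutor and threading.Lock; the word-counting workload is a made-up stand-in for "parallelizable work". Note that nothing stops other code from touching counts without taking counts_lock; the lock is associated with the data only by convention.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

counts: dict[str, int] = {}
counts_lock = threading.Lock()


def count_words(line: str) -> None:
    for word in line.split():
        # The read-modify-write below isn't atomic, so guard the shared dict
        with counts_lock:
            counts[word] = counts.get(word, 0) + 1


lines = ["the quick brown fox", "the lazy dog", "the end"] * 100
with ThreadPoolExecutor(max_workers=4) as pool:
    # list() forces any exception raised in a worker to surface here
    list(pool.map(count_words, lines))
```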

Looking at your CPU usage, you notice that the program is still only using a single core at a time for some reason. It turns out that this is because threads aren't real (in CPython at least). A popular way to solve this problem is multiprocessing via some IPC mechanism like UDS or TCP or HTTP or AMQP or something. You pick one of these and successfully further your goal of scaling vertically, but deep down, you're keenly aware of the overhead of IPC versus shared memory and locks and it leaves a bad taste in your mouth.

During your research about parallelism in Python, you discover some discourse on the topic of removing the GIL, but it seems like the consensus is that this is a bad idea because, somewhat ironically, it would actually hinder performance quite significantly. The reason for this is that the GIL acts as a single lock around the CPython interpreter's internals, and a single lock is very fast and has low contention. If you remove this lock (and still desire programs to run correctly), you'll need to add a bunch of smaller locks around all the objects. Even though there tends to be low contention on all these objects, Python's refcounting memory model now requires atomics, which absolutely annihilates CPU cache as new objects are created and destroyed all the time.

If only there were an alternative memory management scheme to alleviate this problem, like a different flavor of garbage collector or perhaps some way to statically track object lifetimes. But at that point, it probably wouldn't be Python anymore.

Further reading

- Drew DeVault's Python: Please stop screwing over Linux distros

Python/Pydantic Pitfalls

Someone please throw me a rope

I'm going to be focusing on pydantic in this post, since that's what I know best, but from reading through this discussion and glancing at some of the other serialization frameworks, they seem to have similar problems or otherwise look awful to use. If you're trying to use FastAPI, you're locked into pydantic anyway too. We'll be trying to interact with the following JSON schema, and let's say we need to support PersonId (just the ID), Person (rest of the data without the ID), and PersonWithId (data plus ID, shown here) in the code for dictionary reasons:

```json
{
  "title": "PersonWithId",
  "type": "object",
  "properties": {
    "firstName": {
      "title": "Firstname",
      "type": "string"
    },
    "lastName": {
      "title": "Lastname",
      "type": "string"
    },
    "age": {
      "title": "Age",
      "type": "integer"
    },
    "id": {
      "title": "Id",
      "type": "string",
      "format": "uuid"
    }
  },
  "required": [
    "firstName",
    "age",
    "id"
  ]
}
```

This seems pretty straightforward, so let's try defining some models:

```python
from typing import Optional
from uuid import UUID, uuid4

from pydantic import BaseModel, Field


class PersonId(BaseModel):
    # We have to do this because `id` is already taken by a builtin
    which: UUID = Field(..., alias="id")


class Person(BaseModel):
    first_name: str = Field(..., alias="firstName")
    last_name: Optional[str] = Field(..., alias="lastName")
    age: int = Field(...)


class PersonWithId(PersonId, Person):
    pass
```

Cool, now some code to construct a value and convert it to JSON:

```python
person_id = PersonId(which=uuid4())
person = Person(first_name="Charles", age=23)
person_with_id = PersonWithId(**person.dict(), **person_id.dict())
print(person_with_id.json())
```

Time to show the programming world what language is boss:

```
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/charles/science/python/pydantic-sucks/pydantic_sucks/main.py", line 28, in main
    person_id = PersonId(which=uuid4())
  File "pydantic/main.py", line 331, in pydantic.main.BaseModel.__init__
pydantic.error_wrappers.ValidationError: 1 validation error for PersonId
id
  field required (type=value_error.missing)
```

Oh, never mind, I guess. That's weird though, I set which=uuid4() like right there, what do you mean it's not present? Apparently, you must explicitly tell pydantic that you'd like to be able to populate fields by their name. What!? Let's spam Config classes everywhere to fix it:

```diff
 class PersonId(BaseModel):
     which: UUID = Field(..., alias="id")
+    class Config:
+        allow_population_by_field_name = True
+
 class Person(BaseModel):
     first_name: str = Field(..., alias="firstName")
     last_name: Optional[str] = Field(..., alias="lastName")
     age: int = Field(...)
+    class Config:
+        allow_population_by_field_name = True
+
 class PersonWithId(PersonId, Person):
     pass
```

Let's try running the code again:

```
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/charles/science/python/pydantic-sucks/pydantic_sucks/main.py", line 29, in main
    person = Person(first_name="Charles", age=23)
  File "pydantic/main.py", line 331, in pydantic.main.BaseModel.__init__
pydantic.error_wrappers.ValidationError: 1 validation error for Person
lastName
  field required (type=value_error.missing)
```

Great. Why is this happening? I specified the type for last_name to be Optional[T] and the docs say it's aware of that type. Scouring the docs for a while, we learn that pydantic actually distinguishes between "missing field" and "field is set to None/null" for some reason. Whatever, let's fix it:

```diff
 class Person(BaseModel):
     first_name: str = Field(..., alias="firstName")
-    last_name: Optional[str] = Field(..., alias="lastName")
+    last_name: Optional[str] = Field(None, alias="lastName")
     age: int = Field(...)
```

And run our code again:

```json
{
  "first_name": "Charles",
  "last_name": null,
  "age": 23,
  "which": "f49be13a-63f1-4ef6-b8f7-b948a32836ed"
}
```

Hooray, no more errors! Except wait, this doesn't look like our JSON schema at all! Why are they being serialized by their field names instead of the aliases? Y'know, the aliases I added for the express purpose of appearing in the JSON because the JSON field names are (mostly) (stylistically and sometimes semantically) invalid Python identifiers? The docs say you have to explicitly ask for them to be serialized by their aliases. Why though? If this isn't the default, what are the aliases even for? Whatever, let's try it:

```diff
-print(person_with_id.json())
+print(person_with_id.json(by_alias=True))
```

And the output:

```json
{
  "firstName": "Charles",
  "lastName": null,
  "age": 23,
  "id": "41501697-8ed7-4d2c-8bd0-47aea0d5cd92"
}
```

We've finally done it. I should be excited to be getting this working but at this point I'm actually just exhausted. Plus I've still got some questions. There are still two calls to some_model.dict() that don't take by_alias=True, but the code appears to work anyway. How am I supposed to remember when it's required to add by_alias=True and when it would break things if I did add it? I think this issue is on Python itself, for baking the concept of a constructor into the language and for allowing everything to be represented as dicts. (More on that later.) Also, what does pyright think about our code now? Let's find out:

```text
error: No parameter named "which" (reportGeneralTypeIssues)
error: Argument missing for parameter "id" (reportGeneralTypeIssues)
error: No parameter named "first_name" (reportGeneralTypeIssues)
error: Arguments missing for parameters "firstName", "lastName" (reportGeneralTypeIssues)
```

A little digging suggests that pyright is now expecting you to initialize the fields based on their aliases instead of their actual names. Again, this seems extremely backwards. How can I fix this without using (stylistically or semantically) illegal identifiers? This discussion and this comment specifically suggest that pyright is thinking too hard about what identifier a field name should be populated by. To be fair to it, it's very weird for a typechecker to have a constraint that a field is initialized by exactly one of two names in a generalized fashion. Oh, was it unclear that you can still populate the fields by both names? Because yeah, you can do that. So anyway, how do I appease pyright? It seems like the only way to do so is to add # type: ignore everywhere. Remember, doing # pylint: disable=invalid-name is not an option because of JSON field names that are semantically invalid Python names, such as id or anything using hyphens for word separators. So, let's clutter the place with sad comments:

```diff
-person_id = PersonId(which=uuid4())
-person = Person(first_name="Charles", age=23)
+person_id = PersonId(which=uuid4()) # type: ignore
+person = Person(first_name="Charles", age=23) # type: ignore
```

Now pyright, pylint, and the Python interpreter (in the case of semantically illegal names) are all satisfied and don't give us any issues. But at what cost? This code now typechecks perfectly fine but will detonate at runtime:

```diff
-person = Person(first_name="Charles", age=23) # type: ignore
+person = Person(first_name="Charles", age="lol") # type: ignore
```

And this code typechecks fine and, somewhat surprisingly, works at runtime too. This is not very Parse, Don't Validate and thus is almost certainly prone to causing problems in the future:

```diff
-person = Person(first_name="Charles", age=23) # type: ignore
+person = Person(first_name="Charles", age="23") # type: ignore
```

This code typechecks and runs fine, but omits the extraneous value in the output that we potentially wanted to show up there:

```diff
-person = Person(first_name="Charles", age=23) # type: ignore
+person = Person(first_name="Charles", age=23, fingers=10) # type: ignore
```

We can at least configure pydantic to give us errors about that situation, so that a test suite (assuming there is one, and assuming it has good coverage) can catch this before we deploy anything, by adding extra = Extra.forbid to all of our Config interior classes (or whatever they're called), which results in errors like this:

```
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/charles/science/python/pydantic-sucks/pydantic_sucks/main.py", line 31, in main
    person = Person(first_name="Charles", age=23, fingers=10) # type: ignore
  File "pydantic/main.py", line 331, in pydantic.main.BaseModel.__init__
pydantic.error_wrappers.ValidationError: 1 validation error for Person
fingers
  extra fields not permitted (type=value_error.extra)
```

Speaking of errors, what happens if our code fails to serialize or deserialize? Maybe our code constructed a value that didn't pass a validator or is the wrong type or the incoming data was malformed or some other such problem. In that case, we have to deal with the pitfalls of Python's error handling facilities, which I've talked more about in a previous post.

Speaking of deserialization, what about doing that with pydantic? You get at least all the same problems as above, and at this point I don't really want to talk about pydantic anymore. Except to make the point again that the way aliases work in pydantic (and similar libraries) is extremely backwards and the default behavior is really bad; I hope at this point I've demonstrated clearly why this is the case, but it's worth repeating just to be sure.

Let's talk about something else

What if we wanted to do this in Rust? Let's define our models:

```rust
// Assumes the uuid crate is built with its "serde" and "v4" features
use serde::{Deserialize, Serialize};
use uuid::Uuid;

#[derive(Serialize, Deserialize)]
struct PersonId(Uuid);

#[derive(Serialize, Deserialize)]
struct Person {
    #[serde(rename = "firstName")]
    first_name: String,
    #[serde(rename = "lastName")]
    last_name: Option<String>,
    age: u8,
}

#[derive(Serialize, Deserialize)]
struct PersonWithId {
    id: PersonId,
    #[serde(flatten)]
    person: Person,
}
```

And some code to construct a value and serialize it:

```rust
fn main() -> Result<(), serde_json::Error> {
    let id = PersonId(Uuid::new_v4());
    let person = Person {
        first_name: "Charles".to_owned(),
        last_name: None,
        age: 23,
    };
    let person_with_id = PersonWithId { id, person };
    let json = serde_json::to_string(&person_with_id)?;
    println!("{json}");
    Ok(())
}
```

Now let's see what happens when we run it:

```json
{
  "id": "ecacbd24-2c68-45a0-aaf0-ebee4390c16a",
  "firstName": "Charles",
  "lastName": null,
  "age": 23
}
```

Why did — oh this isn't Python; it actually worked first try. Sorry, force of habit. Anyway, why does this work, and why is it so much safer and more intuitive?

- #[serde(rename = "whatever")] does what you think it would, unlike pydantic's alias, and no extra configuration is required beyond setting the new name we want. (serde also allows setting different names for serialization and deserialization, which can be handy when, for example, you want to use the same model to deserialize from your database and serialize for your web API.)
- There are no weird typechecking issues because there are no constructors getting modified at runtime to accept multiple names for the same things.
- PersonWithId doesn't require a roundtrip of its components through a HashMap (aka dict), and because of that and the first point, there's no fear of whether you should have added by_alias=True.
- It also means any inability to map fields correctly will cause a compile time error instead of a runtime one.
- Any attempt to add a new field in the let person = Person { ... }; section will cause a compile error because the struct does not yet define that new field. You can achieve the same for deserialization by annotating the container with #[serde(deny_unknown_fields)].
- Any places where a runtime error can occur are made clear by the Result type; in this case we're simply handling it with the ? operator.
- There's no weird coercion happening: trying to set age: "23" without explicitly converting it from &str to u8 via parse() or such would fail to compile instead of potentially accepting bad values, which is much more in the Parse, Don't Validate spirit.

Conclusion

I don't really know how to end this article without either saying something overly sassy about Python or holding my head in my hands in sadness. I hope Python's situation improves, or maybe there's a better library out there that solves all of these problems somehow (you'd still have to deal with constructors) that I just haven't seen yet. In the meantime, I'm going to continue using Rust when I can and Python only when I have to. I guess I went the sadness route.

Addendum

For FastAPI in particular, it looks like it sets by_alias=True by default for returning responses built from pydantic objects. This is notably not the same as pydantic's default, which is yet another violation of the Principle of Least Astonishment in the Python ecosystem. I also see this merged PR suggesting that you cannot disable this behavior without either causing inconsistencies in the schema presented in the generated OpenAPI spec or requiring you to implement a dummy model for the express purpose of showing up in the OpenAPI spec with the correct field names, which is another POLA violation and seems awful to maintain.

A large cause of problems with complex pydantic code is the following pattern:

# NewThing inherits from BaseModel and OldThing, and adds its own extra fields

new_thing = NewThing(**old_thing.dict(), **other_thing.dict())

# Or

new_thing = NewThing(**old_thing.dict(), **{"new_field": new_value})

# Or

new_thing = NewThing(**old_thing.dict(), new_field=new_value)

Unlike Rust, Python has an explicit concept of constructors and also supports inheritance. These two features prove themselves to be problems here, because their existence tricks people into using them. pydantic makes this mistake by requiring object composition to be facilitated through the use of inheritance, and Python then provides them no choice but to use constructors to construct these values. The result is that, in any of the above examples, it is impossible to statically verify that every field of NewThing gets set properly by its constructor's arguments, so all of them are suspect for causing runtime errors.

This could be fixed by listing out every individual field of NewThing, including those inherited from OldThing, and explicitly matching up every one of those fields with the new value from the example constructors' arguments. The massive downside is the maintenance burden: now you have to keep every instance of this type of conversion in sync, which is fallible, since accidentally omitting Optional[T] fields will run and typecheck fine even though they should have been set to some value from other_thing, for example. Rust and serde solve all of these issues by simply storing all of old_thing inside NewThing via #[serde(flatten)], alongside other_thing or any other new fields NewThing needs to have, as demonstrated above.
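Below is a minimal sketch of that failure mode. It uses the standard library's dataclasses as a stand-in for pydantic so it runs without extra dependencies. OldThing, OtherThing, NewThing, and ComposedThing are hypothetical names that mirror the examples above. The composed version is only loosely analogous to #[serde(flatten)]: it stores the old value whole, but asdict() would serialize it as a nested object rather than flattening it.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class OldThing:
    name: str
    age: int


@dataclass
class OtherThing:
    email: str


# Inheritance-based "composition", like the pydantic pattern above:
# NewThing inherits OldThing's fields and adds its own
@dataclass
class NewThing(OldThing):
    email: Optional[str] = None
    new_field: int = 0


old_thing = OldThing(name="Ferris", age=23)
other_thing = OtherThing(email="ferris@example.com")

# asdict() returns dict[str, Any], so a typechecker cannot tell which fields
# the splat provides. Forgetting **asdict(other_thing) still runs and
# typechecks; email silently stays None.
forgot_email = NewThing(**asdict(old_thing), new_field=1)


# Composition instead: every part is a required, explicitly typed field
@dataclass
class ComposedThing:
    old: OldThing
    other: OtherThing
    new_field: int


# Leaving out other= here would be a TypeError at runtime and a
# missing-argument error for a typechecker
composed = ComposedThing(old=old_thing, other=other_thing, new_field=1)
```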

Python and some libraries (like pydantic) treat names with leading underscores specially, which may have adverse effects on the serializability of fields with certain names. I know Go also uses a similar method (casing) to convey privacy information, and I wonder if Go has similar issues at the intersection of those and serialization. Again, Rust/serde does not exhibit this issue because naming has no real effect (a single leading underscore can silence unused-code warnings, but that's it) and #[serde(rename = "whatever")] is painless and straightforward to use.

FAQ

-

Q: I haven't heard of anyone else having this problem, are you just bad at programming?

A: You know, I haven't either. Maybe I am. *shrug*

-

Q: Why do serde-like frameworks combine the model definition and its configuration in the first place?

A: Because if they didn't, you'd wind up with a worse version of the problem with by_alias=True. Keeping them separate makes it possible to try to serialize something with the wrong configuration, instead of simply baking the configuration in so it's applied every time, transparently to you. Maybe it wouldn't be so bad if all you were doing was changing field names, but there's a lot of stuff you can do with serde container and field attributes, pydantic validators, and manually implementing serde traits for complicated things. In serde's case, you'd also take a performance hit, because it would have to check a separate source for what to do with every single model in the tree, instead of what it currently does, which is generate the correctly-configured code at compile time.

Python has sum types

I am absolutely thrilled about this discovery.

Python doesn't have sum types

One of my biggest gripes with Python is that it doesn't have sum types, or a way to emulate them. For example:

>>> from typing import List

>>> x = []

>>> isinstance(x, List[int])

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/nix/store/nvxp3xmlrxj9sw66dk7l0grz9m4889jn-python3-3.9.12/lib/python3.9/typing.py", line 720, in __instancecheck__

return self.__subclasscheck__(type(obj))

File "/nix/store/nvxp3xmlrxj9sw66dk7l0grz9m4889jn-python3-3.9.12/lib/python3.9/typing.py", line 723, in __subclasscheck__

raise TypeError("Subscripted generics cannot be used with"

TypeError: Subscripted generics cannot be used with class and instance checks

This means that Python has no way to check if a variable is an instance of a specific type[1]...

Unless it does

I present to you: typing.Literal. "How is that relevant?" you ask. Excellent question. First, let's remember that sum types are also known as "tagged unions". Python has unions in the form of typing.Union (or the | syntax in Python 3.10 and newer). Given this, we can create the union half of a sum type like this:

from typing import Union, List

StrOrIntList = Union[str, List[int]]
#                    ^^^  ^^^^^^^^^ A **variant**
#                    |
#                    Another **variant**

The next problem is figuring out how to detect which variant we have. The obvious strategy is to use isinstance, but as established, isinstance is not flexible enough. I also looked around to see if there's a way to get type annotation information at runtime so one could check against that, but this doesn't seem to be possible. Even if you could, it would also not cover the case where you want multiple variants with the same data type: Union[int, int, str] is the same as Union[int, str] to the typechecker[2], so there's no way to tell the two ints apart.
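This deduplication is not only a typechecker concept: the typing module does the same thing at runtime. The quick sketch below assumes Python 3.8+ for typing.get_args.

```python
from typing import List, Union, get_args

# Duplicate members are removed when a Union is built, so there is no way
# to tell the two ints apart, at typecheck time or at runtime
assert Union[int, int, str] == Union[int, str]
assert get_args(Union[int, int, str]) == (int, str)

# With only one distinct member left, the Union is dropped entirely
assert Union[int, int, int] is int

# isinstance() accepts plain classes, but not subscripted generics
assert isinstance([], list)
try:
    isinstance([], List[int])
except TypeError as err:
    print("TypeError:", err)
```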

Next, we need some mechanism to associate each variant with a tag that's accessible at both runtime and typecheck-time, so that we can do control flow at runtime and allow the typechecker to assert that we're checking the right things before even running the code. For the association portion, we know that types can be paired together in Python by using typing.Tuple like this:

from typing import Tuple, List

StrAndInt = Tuple[str, List[int]]
#                 ^^^  ^^^^^^^^^ We'd like this to be the **variant's** data
#                 |
#                 We'd like to use this as our **tag**

This has a major problem, which is that the typechecker has no insight into the possible values of our tag, the str. This practically defeats the entire purpose, since it robs us of assertions that we're checking against valid tags, and that we're checking against all valid tags. After all, the goal is to ensure more things can be checked before runtime, and having to run the code to make sure you have no typos in strings where you're checking against a tag is pretty self-defeating. For a week or so after coming up with what I've discussed so far, I had no solution to this problem, and I thought most hope was lost. But then I had a realization, which led directly to this blog post.

For some reason[3], Python allows you to use value literals as types. Importantly, value literals can be used not only as a type, but also as a value. Using this slightly odd behavior[4], we can create a tag that's accessible at both runtime and at typecheck-time. For example:

from typing import List, Tuple, Literal

NamedInt = Tuple[Literal["a tag"], List[int]]
#                ^^^^^^^^^^^^^^^^ A statically-analyzable
#                                 *and* runtime-accessible **tag**!

On its own, this construct is completely and utterly useless. But, if we combine our tags with a union, we get...

Tagged unions

Which are also known as...

Sum types

In Python, sum types are constructed as a union of tuples of a tag and the variant's data. Here's an example:

from typing import List, Tuple, Literal, Union

MySumType = Union[
#           ^^^^^ The union
    Tuple[Literal["string"], str],
    Tuple[Literal["list of ints"], List[int]],
#   ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ A variant
#         ^^^^^^^^^^^^^^^^^^^^^^^  ^^^^^^^^^ The variant's data
#         |
#         The variant's tag
]

Now that we have our sum type, I'll demonstrate how it's used. The type of x[1] where x is of type MySumType can be either str or List[int]. We can determine the type of x[1] we have by looking at the tag, which is x[0]. Then, if we do things right, our typechecker will be able to automatically narrow the type of x[1] to either str or List[int] based on the type and value of x[0] in our control flow. Let's give it a try:

def gives_sum_type() -> MySumType:
    # Just return one of the two "variants"
    return ("list of ints", [1, 2, 2, 3])
    # We could also do this, for example:
    # return ("string", "foo")


def uses_sum_type():
    match gives_sum_type():
        case ("string", x):
            print("the variant contains a string:", x)
        case ("list of ints", x):
            print("the variant contains a list of ints:", x)

The typechecker narrows the type of x in each branch to either str or List[int] based on the tag matched in the case arm. Values in the tag position that do not correspond to any tag in the sum type's definition will cause the typechecker to emit an error; typos and the removal of variants from the sum type's definition are both covered by this case. Exhaustiveness can also be checked in match statements (for example, by Pyright's reportMatchNotExhaustive check), so if you later add variants to MySumType, the typechecker will emit an error since not all variants are covered.

If you're not on Python 3.10 or later (which is when match was introduced), then you can use the following hack to get the same guarantees:

from typing import NoReturn


def unreachable(_: NoReturn) -> NoReturn:
    raise AssertionError("unreachable")


def uses_sum_type():
    x = gives_sum_type()
    if x[0] == "string":
        y = x[1]
        print("the variant contains a string:", y)
    elif x[0] == "list of ints":
        y = x[1]
        print("the variant contains a list of ints:", y)
    else:
        unreachable(x)

Avoiding a typesafety footgun

Given the following:

from typing import List, Tuple, Literal, Union

MySumType = Union[

Tuple[Literal["string"], str],

Tuple[Literal["list of ints"], List[int]],

]

MyOtherSumType = Union[

Tuple[Literal["string"], str],

Tuple[Literal["list of strings"], List[str]],

]

The code ("string", "foo") will pass the typechecker as either MySumType or MyOtherSumType because they both have a variant with the same name, which is not great. One way to get around this would be to create a wrapper class and instantiate your sum type inside its constructor. For example:

from typing import List, Tuple, Literal, Union


class MySumType:
    def __init__(
        self,
        adt: Union[
            Tuple[Literal["string"], str],
            Tuple[Literal["list of ints"], List[int]],
        ],
    ) -> None:
        self.adt = adt

Now you'd write MySumType(("string", "foo")) instead, which will never typecheck as another sum type that happens to have variants with the same name. In order to use match with this type, you'd simply match on its .adt attribute (e.g. match x.adt:) to get access to the inner type. This has the added bonus that you can now add methods to sum types, which is pretty cool.

Adding documentation

You might also want to add documentation to your sum type variants. We can accomplish this by moving away from Literal["..."]s and creating new types:

from typing import List, Tuple, Union


class String:
    """
    A variant containing a string
    """


class ListOfInts:
    """
    A variant containing a list of ints
    """


class MySumType:
    def __init__(
        self,
        adt: Union[
            Tuple[String, str],
            Tuple[ListOfInts, List[int]],
        ],
    ) -> None:
        self.adt = adt

This is slightly more awkward to write, as MySumType((String(), "foo")), but the benefit of documentation outweighs it in my opinion. Matching still works as expected as well.

Danger zone

One famously successful use-case for sum types is error handling: their exhaustive and explicit properties make it easy to determine which failures are possible, and from there, which failures you can handle, and then to handle those in a type-safe, high-confidence manner. We can now accomplish this in Python by replacing the use of exceptions with the following foundation[5]:

from typing import TypeVar, Union, Tuple, Generic


class Ok:
    """
    A variant containing a success value
    """


class Err:
    """
    A variant containing an error value
    """


T = TypeVar("T")
E = TypeVar("E")


class Result(Generic[T, E]):
    def __init__(
        self,
        adt: Union[
            Tuple[Ok, T],
            Tuple[Err, E],
        ],
    ) -> None:
        self.adt = adt

    # More methods...

This is what scientists call "carcinisation", which is the phenomenon wherein, given a programming language and enough time, it will eventually become Rust.

Footnotes

[1] If the types have generic parameters. Obviously, this works for "regular" types, because otherwise isinstance() would be completely useless.

[2] Even further, Union[T, T, T] is the same as T; note how the Union is dropped entirely.

[3] I am indifferent to the rationale that caused this behavior to exist, but I am very happy that it does because it is incidentally core to making proper sum types in Python.

[4] Hmm, maybe it's not that odd, I guess you could use it to do const generics? I bet that's why it exists.

[5] I would also like to have an ABC for sum types that provides a single adt() method that performs a conversion like Result -> ResultRaw so that this sort of interface can be identical for all sum types, which would make writing manual match statements more consistent. I can't think of an easy way to statically type such an ABC right now though, and the .adt convention is good enough for my immediate purposes.

Error handling in Rust

Some error handling strategies are more equal than others.

Type-erased errors

Box<dyn std::error::Error>[1] certainly has its uses. It's very convenient if the API consumer[2] genuinely does not care what an error was, only that there was an error. If the reactive action the API consumer needs to perform when an error occurs is exactly the same regardless of what the error actually was, then Box<dyn Error> is perfectly fine. It's easy to reach for Box<dyn Error> because getting ? to work on all error types in your function for free is very attractive from a convenience standpoint. However, once you need to do something specific when a specific error occurs, you should no longer be using Box<dyn Error>.

In order to handle errors inside Box<dyn Error>, you must know the exact type signature of the error you intend to handle. With generic code, this can sometimes be difficult, especially if you're new to Rust. The compiler can provide almost no useful diagnostics about whether you're trying to downcast to the right type. This also means you have to keep track of which concrete errors are inside the Box<dyn Error> yourself so that you don't accidentally try to handle an error that will never occur there. The loss of (potential for) exhaustive error handling makes it difficult to have confidence in a program's robustness, because Box<dyn Error> does not encode descriptions of a function's failure modes in the type system, which makes it extremely easy to overlook easily-handled errors, instead turning them into fatal problems for program functionality.

Something else that came to my attention was the question of what to do in the situation where you're writing a library whose errors are caused by errors defined in your dependencies/crates you don't control. One school of thought is to never expose the concrete type of your dependency's error type, instead preferring to return an enum variant containing simply Box<dyn Error>. If your users care about the specifics of that downstream error, they can add your dependency to their dependencies, then downcast to the concrete type in the code using your library. This way, if you ever make a semver-incompatible upgrade to that package, you don't break downstream compilations.

I think this is an incredibly bad idea, because while it doesn't break compilation, it does break runtime behavior. The downcast will no longer succeed, since types from two semver-incompatible versions of the same crate are not the same type. This sort of behavior seems antithetical to Rust; we have a borrow checker for a reason. Specifically, if your error handling path was important and needs to run whenever that error occurs, this could be extremely costly in terms of data corruption, lost capital, or just time spent trying to debug why the heck your code stopped working when you changed nothing (other than running cargo update, which may even be done automatically by your CI pipeline). (The alternative I propose is to simply not do this, and instead just expose the concrete type directly.)

Strongly typed errors

The[3] alternative to Box<dyn Error> is to create a custom enum with a variant for each error type. For example, you might have variants like Deserialize(serde_json::Error), Http(reqwest::Error), and maybe an "unknown" variant[4] if exhaustiveness is infeasible. If you're an API consumer, the bar for "infeasible" is as low as "I don't need to handle this anywhere so I'm not going to make a variant for it". But, as soon as you do need to handle it, you need to make a variant for it. If you're not an API consumer, you should aim to be exhaustive. There are some cases where this is unreasonable, but those situations are rare and, as such, this exception likely does not apply to you.

With error enums, knowing which errors are possible is now absolutely trivial: all you need to do is look at the variants of the enum. The compiler can also give vastly more helpful diagnostics this way, since it will be able to follow the type system around to ensure that you're handling all cases, and that you're not inventing cases that will never actually happen. No longer do you need to rely on possibly-stale manual documentation or have to read the entire call tree to determine what the failure modes are[5].

Another advantage of using enums is allowing multiple errors of the same type to have different semantics. Maybe you need to load two files (std::io::Error), but you need to do something different based on which file failed to load. With enums, you can simply create two variants, one for each behavior. With Box<dyn Error>, this is not possible[6].

Now, the downside: you must manually implement std::error::Error for your new custom error enum. This means Display, Debug, and all the From impls so that ? is still ergonomic. Luckily, the thiserror crate provides a derive macro that generates the Error, Display, and From impls for you (Debug can be derived as usual with #[derive(Debug)]). When you use the #[from] attribute to generate From impls, it even correctly implements std::error::Error::source() for you! This makes acquiring detailed error messages (e.g. for logging) using nothing but (ostensibly) the standard library very easy.

Custom error types

There are some rules about creating custom error types that you should follow in order to create the best possible error messages for your users and fellow developers.

-

If your error has an inner error, or your error is caused by another error, you must pick exactly one of the following options for each inner error:

-

Return the inner error when Error::source() is called.

With thiserror, this means using the #[from] or #[source] attributes. Generally, reach for #[from] first unless it fails to compile, and in that situation, switch to #[source]. Without a From impl, you can use Result<T, E>::map_err() to convert the inner error into your custom error type.

-

Include the inner error's message as part of your own error's.

With thiserror, this means using {0} in your #[error("...")], assuming the variant is a tuple variant with the inner error stored in the zeroth tuple item.

This prevents an error message from containing needlessly duplicated information. These options are also listed in the order that you should prefer them, the first one being much more common. If you're not sure which to do, just pick the first one.

-

The human-readable part of your error message should not include the : character. By "human-readable part" I mean that if, for example, your error message happens to include JSON, then don't worry about it. Basically, just don't use : in #[error("...")] strings.

The reason for this is that : is commonly used to indicate causality on a single line, for example:

failed to create user: failed to execute SQL statement: invalid SQL

Each of these three messages would come from a separate concrete error type, each one wrapped inside an enum variant of the error type before it.

-

Display impls for std::error::Error implementers should not start with a capital letter, except for special cases like messages that begin with an initialism. For example, this looks inconsistent and gross:

failed to create user: Failed to execute SQL statement: Invalid SQL

-

Don't use sentence-ending punctuation in error messages. Your error may not be the last one in the chain.

Displaying error messages

Stick the following code into src/error.rs in your project and add thiserror = "1" to your dev dependencies:

use std::{
    error::Error,
    fmt::{self, Display, Formatter},
    iter,
};

/// Wraps any [`Error`][e] type so that [`Display`][d] includes its sources
///
/// # Examples
///
/// If `Foo` has a source of `Bar`, and `Bar` has a source of `Baz`, then the
/// formatted output of `Chain(&Foo)` will look like this:
///
/// ```
/// # use crate::error::Chain; // YOU WILL NEED TO CHANGE THIS
/// # use thiserror::Error;
/// # #[derive(Debug, Error)]
/// # #[error("foo")]
/// # struct Foo(#[from] Bar);
/// # #[derive(Debug, Error)]
/// # #[error("bar")]
/// # struct Bar(#[from] Baz);
/// # #[derive(Debug, Error)]
/// # #[error("baz")]
/// # struct Baz;
/// # fn try_foo() -> Result<(), Foo> { Err(Foo(Bar(Baz))) }
/// match try_foo() {
///     Ok(foo) => {
///         // Do something with foo
///         # drop(foo);
///         # unreachable!()
///     }
///     Err(e) => {
///         assert_eq!(
///             format!("foo error: {}", Chain(&e)),
///             "foo error: foo: bar: baz"
///         );
///     }
/// }
/// ```
///
/// [e]: Error
/// [d]: Display
#[derive(Debug)]
pub(crate) struct Chain<'a>(pub &'a dyn Error);

impl<'a> Display for Chain<'a> {
    fn fmt(&self, f: &mut Formatter<'_>) -> fmt::Result {
        write!(f, "{}", self.0)?;

        let mut source = self.0.source();

        source
            .into_iter()
            .chain(iter::from_fn(|| {
                source = source.and_then(Error::source);
                source
            }))
            .try_for_each(|source| write!(f, ": {}", source))
    }
}

This representation is most useful for logging, but could be easily adapted for other uses as well. Once the result of this tracking issue lands in stable, this should be even more ergonomic. Especially if they implement this person's suggestion, which is almost exactly what's written above.

A note about panicking

Panicking is not an error handling strategy; panicking is panicking. You should only resort to panicking when an illegal state has been reached, or for convenience when it's not possible to prove with the type system that this state will never occur. If there is no possible way to recover and further program execution wouldn't make any sense, is dangerous, or would invoke undefined behavior, then you can panic. If you have proven out-of-band that this state is unreachable, then you can panic. This also applies to working with Option, Result, and other "maybe a value" types: you should only unwrap (aka get the value you want and panic if it's not there) if the alternative is an illegal state. I could probably fill another blog post with specific examples of when to or when not to unwrap maybe types, so for now I'm just going to leave it at that.

Further reading

- Rust API Guidelines' C-GOOD-ERR

- The docs for std::error::Error

- Guidelines for implementing Display and Error::source for library errors

Footnotes

[1] Henceforth Box<dyn Error>. I'm eliding extra constraints like + Send + Sync + 'static since they're not strictly relevant. Also, you can/should substitute in its other analogs, such as the anyhow crate.

[2] The "API consumer" is the person who will need to handle the error. If you are writing an application, you are an API consumer. If you are writing a library (at least, the public interface), you are not an API consumer. If your codebase is large and you work with other people, you might also want to consider the people you're working with as API consumers when writing new fallible code.

[3] An? I genuinely can't think of a third option.

[4] If you're an API consumer, using an Unknown(Box<dyn Error>) variant straight up is fine as long as you've taken the rest of this blog post into consideration. If you're not an API consumer, this is somewhat more complicated. If your code is the one creating the error and an API consumer is expected to handle it, you may need either Unknown, Unknown(Box<dyn Error>), or some other such variant with an opaque inner type. If you're defining the contract for a function (say, with a trait, or something to do with closures), you should use either T directly (no enum) or an Other(T) variant (where T is a generic parameter) so that your API consumer can decide what to do, instead of having their hand be forced.

[5] Like you would with a language with an explicit concept of exceptions that either don't require type annotations and/or cannot be type-annotated (*cough* Python), or poorly written Rust.

[6] Unless you abuse the newtype pattern. But isn't the whole point of using Box<dyn Error> to not care about errors? Having to create a new type sounds like you care about errors. Also, as a new Rust user, good luck figuring out why you need to downcast to two different types that aren't the type you want to get the type you want.

Considered harmful

A collection of things that I hold to be silly. In these pages, I'll try to convince you that these things should be avoided. See here for the etymology of the title of this section.

Extreme minimalism considered harmful

ORMs considered harmful

"Just write SQL lol" but unironically

I think people reach for ORMs because they are afraid of making mistakes, they do not want to learn SQL, or both. People assume that their ORM of choice will generate correct (i.e. does what they intended), valid (i.e. running it won't produce an error, such as a syntax error), and performant SQL for the queries they need to make, and that they can do this without learning SQL itself. I think wanting to avoid mistakes is a very noble goal, but I do not think ORMs are the right way to achieve it. I also think learning SQL is unavoidable, and reliance on ORMs is a net hindrance for yourself and other maintainers. Allow me to explain why I think these things, and perhaps convince you as well.

ORMs are a performance black box. The query you want to perform may not be perfectly supported by your ORM without some workarounds, leading to suboptimal SQL being generated. Updating your ORM may cause it to generate different SQL for the same ORM code. The ORM may not use the most optimal SQL for a given operation, resulting in worse performance. You can improve performance and the consistency thereof by not using an ORM.

ORMs are an extra moving part, which is an extra point of failure. By using an ORM, you trust its test suite (if any) to ensure that it will generate desirable SQL. You also trust that updating the ORM library won't subtly break your program because it changes the SQL it generates under certain conditions. You can easily make your supply chain more secure and your program more reliable by not using an ORM.

ORMs are a waste of developer bandwidth. Instead of being able to read the documentation for the database you're using to learn how to manipulate and query data, you have to do that and read the documentation for your ORM to translate the SQL you want into ORM code... which just turns it back into the SQL you wanted to begin with. Unless of course, there's a bug, or the ORM can't represent the SQL you want, in which cases you'll need to go back to writing SQL directly anyway. Also, in my experience, ORM documentation quality pales absolutely in comparison to database documentation. You can save yourself and other maintainers a lot of reading and frustration by not using an ORM.

ORMs are a waste of human memory. Learning ORMs is an O(N) problem, where N is the number of ORMs across all programming languages. Each language has a handful of ORMs, so multiple projects in the same programming language may not even use the same ORM. This raises the bar to contributing quite a bit. Contrast this with writing SQL directly, which is an O(1) problem across all programming languages. SQL is the same everywhere, regardless of programming language. (And yes, I'm aware there are multiple flavors of SQL, but that doesn't change this argument at all; ORMs introduce an unnecessary factor either way.) You can let everyone remember more important things by not using an ORM.

ORMs are bad at ensuring query validity and correctness. It's entirely possible for an ORM to generate invalid SQL even though the ORM code is correct. ORMs also generally do little, if any, validation of type information against the database/schema itself, so typesafety is often lost. ORMs effectively only provide a false sense of security. Statically checked queries (such as those made available by SQLx) solve these problems and provide actual safety benefits. Essentially, the compiler finds the text of your SQL queries and dry-runs them against a running database (or a description of your schema) to validate their syntactic, semantic, and type-level correctness, both within the query itself and in how the returned data is used by the code. If any of these checks fail, your code fails to compile, and you've successfully avoided making mistakes and avoided the problems posed by ORMs by not using an ORM.

Unfortunately, not every programming language and database library allow for statically checked queries, and that is a major failing on their part. You should either upstream a fix where possible, or jump ship to something that isn't gimped. If you can't do either of those things, I still urge you to not use ORMs. The lives of the maintainers of your software, including yourself, will be improved by not using an ORM.

Using verifiers in tests considered harmful

Don't test X when you care about Y.

A verifier is something that allows the inspection of (parts of) the call stack of a function-under-test, to assert things like "X function was called Y times", "X function was called with Y and Z arguments (and returned W)", and so on. They are generally combined with mocks, but can be used without them.

For example, let's say a test needs to be written for a function that does the equivalent of mkdir -p foo/bar/baz. Someone using verifiers would first need to mock the language's standard library's "create directory" function, to avoid the side effect of actually creating directories. Then, they might assert that the "create directory" function was called 3 times, perhaps with the expected arguments.

Later on, it turns out that creating directories is a major bottleneck in the application for whatever reason, and so this logic needs to be rewritten using io_uring. This probably means pulling in a new dependency that provides its own io_uring-aware "create directory" function. Once the refactoring is completed, the test written above is now failing even though it still has the exact same behavior. Unfortunately, the mock and verifier applied in the original test are not aware of this new way of creating directories, and so the test fails because it was reliant on implementation details of the original code.

This situation is both frustrating and avoidable. I don't have any advice for handling the frustration, but I do have advice for avoiding inflicting it: instead of testing that the language's standard library's "create directory" function was called 3 times (mocked or not), one should instead run the function-under-test without mocking away its side effects and then read back the directories on disk and assert that they were created as desired. This way, the exact methodology for creating these side effects is free to change as needed, and the tests will only and always pass as long as the desired observable behavior is maintained.

The important differences between these two ways of writing this test are that the verifiers method:

- produces spurious failures and becomes a maintenance burden when the implementation changes

- does not guarantee that the function-under-test causes the desired (side) effect

While the other method:

- is resilient to changes in the implementation details

- does guarantee that the function-under-test causes the desired (side) effect
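Here is a minimal sketch of the read-back approach in Python. It assumes the function-under-test simply wraps os.makedirs, and create_nested_dirs is a hypothetical name. The test uses a real temporary directory instead of a mock, so any implementation that produces the same tree on disk will pass.

```python
import os
import tempfile
from pathlib import Path


def create_nested_dirs(base: Path) -> None:
    # Hypothetical function-under-test: the equivalent of `mkdir -p foo/bar/baz`
    # inside `base`. Its implementation is free to change (e.g. to io_uring).
    os.makedirs(base / "foo" / "bar" / "baz", exist_ok=True)


def test_create_nested_dirs() -> None:
    # A real (temporary) directory instead of a mocked "create directory" call
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        create_nested_dirs(base)

        # Read the tree back from disk and assert on the observable result
        created = sorted(p.relative_to(base).as_posix() for p in base.rglob("*"))
        assert created == ["foo", "foo/bar", "foo/bar/baz"]


test_create_nested_dirs()
```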

Short-form annoyances

There are a lot of pain points in various things I interact with that aren't really worthy of a typical-length blog post. This gives me a place to write about these issues because, regardless of article length, I believe they're still important.

Python annoyances

Day (date.today() - date(2021, 7, 14)).days of not understanding why Python is used in production

pathlib

```
>>> from pathlib import PurePath
>>> PurePath(b"")
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/nix/store/y3inmdhijqkb4qj36yphj4cbllljhqzz-python3-3.9.6/lib/python3.9/pathlib.py", line 665, in __new__
    return cls._from_parts(args)
  File "/nix/store/y3inmdhijqkb4qj36yphj4cbllljhqzz-python3-3.9.6/lib/python3.9/pathlib.py", line 697, in _from_parts
    drv, root, parts = self._parse_args(args)
  File "/nix/store/y3inmdhijqkb4qj36yphj4cbllljhqzz-python3-3.9.6/lib/python3.9/pathlib.py", line 686, in _parse_args
    raise TypeError(
TypeError: argument should be a str object or an os.PathLike object returning str, not <class 'bytes'>
```

This is fine because every file system on the planet is UTF-8, clearly.

I heard a counterargument that "it says in the docs that for 'low-level path manipulation on strings, you can also use the os.path module.'" I have a few issues with this: nowhere in the docs are there explicit mentions that exceptions will be raised when passing bytes instead of str; nowhere in the docs are there explicit type annotations suggesting that you can only use str; and that little warning is phrased so passively that it doesn't seem like there's any real reason to care about this case in the first place.

I would expect a library designed specifically for dealing with paths to be able to deal with paths, so I find this behavior to be... surprising.

A counterargument I heard to this is that "pathlib just provides a high level OOP interface to paths", but I don't understand how that's mutually exclusive with handling bytes or non-UTF-8 paths.
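On POSIX systems, the usual workarounds are to drop down to `os.path` for bytes, or to round-trip through `os.fsdecode`/`os.fsencode`, which use the filesystem encoding with the `surrogateescape` error handler so undecodable bytes survive as lone surrogates. A minimal sketch, assuming a POSIX platform and a made-up Latin-1 file name:

```python
import os
import posixpath
from pathlib import PurePosixPath

# Hypothetical file name encoded as Latin-1: b"\xe9" is "é" there, but invalid UTF-8.
raw_name = b"caf\xe9.txt"

# os.path (posixpath here, to pin the separator) accepts bytes directly.
joined = posixpath.join(b"/tmp", raw_name)

# pathlib rejects bytes outright, as in the traceback above.
try:
    PurePosixPath(raw_name)
    pathlib_accepts_bytes = True
except TypeError:
    pathlib_accepts_bytes = False

# Workaround: decode with the filesystem encoding and error handler
# (surrogateescape on POSIX), so undecodable bytes become lone surrogates...
path = PurePosixPath("/tmp") / os.fsdecode(raw_name)
# ...and encode back to recover the exact original bytes.
round_tripped = os.fsencode(path)

print(joined)                   # b'/tmp/caf\xe9.txt'
print(pathlib_accepts_bytes)    # False
print(round_tripped == joined)  # True
```

It works, but it is exactly the kind of ceremony a path library could do for you.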

datetime

I'd like to convert an ISO 8601 timestamp string to the appropriate Python object. Looks like datetime.fromisoformat is the way to do that. But wait:

Caution: This does not support parsing arbitrary ISO 8601 strings - it is only intended as the inverse operation of datetime.isoformat(). A more full-featured ISO 8601 parser, dateutil.parser.isoparse, is available in the third-party package dateutil.

If you're going to add a function for this to the standard library, you'd think you'd want to avoid half-assing it, no? Since it's built-in, it's way more likely to be used than any third-party package. Anyway, now that I've got my ISO 8601 string (which includes timezone information) converted into a datetime object, let's compare it against the current time:

```
(Pdb) p cache_expires_at
datetime.datetime(2022, 4, 20, 21, 41, 52, 955721, tzinfo=datetime.timezone.utc)
(Pdb) p cache_expires_at < datetime.utcnow()
*** TypeError: can't compare offset-naive and offset-aware datetimes
(Pdb) datetime.utcnow()
datetime.datetime(2022, 4, 20, 20, 43, 23, 982491)
```

... what!? Why does datetime.utcnow() not have timezone information? Shouldn't it know what timezone the datetime it's creating is in, since it literally has utc in the name? Okay, it looks like the docs actually address this:

Warning: Because naive datetime objects are treated by many datetime methods as local times, it is preferred to use aware datetimes to represent times in UTC. As such, the recommended way to create an object representing the current time in UTC is by calling datetime.now(timezone.utc).

Well, sort of, anyway. Why even provide this method if it omits timezone information, then? Why are naive datetimes treated as local time? I bet there are some horrifying edge cases there. Another big point of pain is that since naive and aware timestamps are both the same type, tools like pyright can't even warn about this stuff statically. You need good code coverage (hard, rare) or manual testing (ew) to be able to detect this sort of error. Similarly, pydantic can't easily enforce timestamps to be timezone-aware since, again, there's a single type for both cases. It's incredibly silly to allow this sort of error to even happen when it could so easily be prevented by having two separate types.
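Short of the standard library growing a second type, one way to approximate the "two separate types" idea today is a `typing.NewType` wrapper plus a single runtime check at the boundary. A minimal sketch; `AwareDatetime`, `as_aware`, `utc_now`, and `is_expired` are hypothetical names, and the wrapper only helps pyright if the rest of the code actually requires it. (Also worth knowing: `datetime.utcnow()` is deprecated as of Python 3.12.)

```python
from datetime import datetime, timedelta, timezone
from typing import NewType

# Hypothetical distinct type: to pyright, a plain datetime is not an AwareDatetime.
AwareDatetime = NewType("AwareDatetime", datetime)


def as_aware(dt: datetime) -> AwareDatetime:
    """Hypothetical boundary check: reject naive datetimes once, up front."""
    # utcoffset() returns None exactly when the datetime is naive.
    if dt.utcoffset() is None:
        raise ValueError(f"expected a timezone-aware datetime, got {dt!r}")
    return AwareDatetime(dt)


def utc_now() -> AwareDatetime:
    # The spelling the docs recommend instead of datetime.utcnow().
    return AwareDatetime(datetime.now(timezone.utc))


def is_expired(expires_at: AwareDatetime) -> bool:
    # Both operands are aware, so this cannot hit the naive/aware TypeError.
    return expires_at < utc_now()


cache_expires_at = as_aware(datetime.fromisoformat("2022-04-20T21:41:52.955721+00:00"))
print(is_expired(cache_expires_at))  # True: that moment is in the past
print(is_expired(as_aware(utc_now() + timedelta(days=1))))  # False
```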

PyPI

For some reason, PyPI allows packages to be uploaded with version requirements that almost certainly will not work. If I make a package that depends on some other package with a requirement like * or >1, PyPI will happily accept my upload. The problem is that, as soon as that dependency releases 2.0, my package is likely to break. For a real-world example of this, see here.

PEP 440 defines Python's own special versioning scheme (instead of just using SemVer like everyone else) with liberal usage of the word "MUST", but then official Python tooling (like PyPI) opts not to enforce it at all. What's even the point, then? Also, what even is a "post release"? Asking Google "post release meaning" gives me a bunch of stuff about prisoners, and appending "software" to the query doesn't help either. After eventually finding the explanation in the PEP, the answer is "it's functionally identical to SemVer Patch releases except we decided to make it a separate thing for no reason".

Since Python decided not to use SemVer, it now also needs to invent its own syntax for specifying allowable dependency versions. It's a mess, and quite easy to misuse, since nobody knows what ~= means, nor realizes that you can use a comma (,) to combine constraints. This could all have been neatly avoided by adopting SemVer instead. Speaking of ~=, here's a cheap shot:

The spelling of the compatible release clause (~=) is inspired by the Ruby (~>) and PHP (~) equivalents.

— PEP 440

Ah yes, PHP, the paragon of good design. Smartly, Poetry lets you just use the caret syntax (^) that SemVer-based ecosystems like npm and Cargo already use for this.
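To make the ~= semantics concrete: PEP 440 defines `~= V.N` as `>= V.N, == V.*`, i.e. "at least this version, without changing anything left of the last component you wrote". Below is a minimal sketch of that rule, assuming plain numeric versions only (no pre-, post-, dev- or local segments); `compatible_release` is a hypothetical helper, not the API of the `packaging` library or any resolver. Note how `~=1.4` and `~=1.4.5` admit very different ranges, while a bare `>1` would admit 2.0 and everything after it.

```python
from __future__ import annotations


def _release(version: str) -> tuple[int, ...]:
    # Plain numeric release segments only, e.g. "1.4.5" -> (1, 4, 5).
    return tuple(int(part) for part in version.split("."))


def _padded(a: tuple[int, ...], b: tuple[int, ...]) -> tuple[tuple[int, ...], tuple[int, ...]]:
    # PEP 440 compares release segments as if zero-padded, so 1.4 == 1.4.0.
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)), b + (0,) * (width - len(b))


def compatible_release(candidate: str, spec: str) -> bool:
    """Sketch of `~= spec`: at least `spec`, and same prefix as `spec` minus its last part."""
    bound = _release(spec)
    if len(bound) < 2:
        raise ValueError("~= needs at least two release segments, e.g. ~=1.4")
    cand, low = _padded(_release(candidate), bound)
    prefix = bound[:-1]
    return cand >= low and cand[: len(prefix)] == prefix


versions = ("1.4.5", "1.4.9", "1.5.0", "1.9", "2.0")
for spec in ("1.4", "1.4.5"):
    print(spec, [v for v in versions if compatible_release(v, spec)])
# 1.4 ['1.4.5', '1.4.9', '1.5.0', '1.9']
# 1.4.5 ['1.4.5', '1.4.9']
```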

Poetry

poetry remove has no --lock option.

Adding dependencies

poetry add can take forever. Trying to add new dependencies is a nightmare, both because of those performance issues and because, due to the way that Python imports work, it is impossible to have multiple versions of a single package installed at a time. As a direct result, I just spent over five minutes trying to install dependencies. Observe:

```
$ poetry add --lock --source REDACTED [REDACTED_0..REDACTED_6]
...
Updating dependencies
Resolving dependencies... (40.4s)
...
SolverProblemError
```

Then, after some vim pyproject.toml to comment out the things that caused the SolverProblemError:

```
$ poetry add --lock --source REDACTED [REDACTED_0..REDACTED_6]
...
Updating dependencies
Resolving dependencies... (115.3s)
...
Writing lock file
```

Cool, this time it worked, but I'm still not done getting the dependencies I need. So let's add them back:

```
$ poetry add --lock attrs marshmallow
...
Updating dependencies
Resolving dependencies... (39.0s)
...
SolverProblemError
```

Okay, fine, so I need to manually specify an older version of marshmallow, because for some reason poetry just picks the newest one instead of trying to find the newest compatible one. Let's try again with the version it says is causing the conflict:

```
$ poetry add --lock attrs 'marshmallow^2'
...
Updating dependencies
Resolving dependencies... (35.8s)
...
SolverProblemError
```

Okay so now attrs is having the same problem. Following the same pattern:

```
$ poetry add --lock 'attrs^19' 'marshmallow^2'
...
Updating dependencies
Resolving dependencies... (106.8s)
...
Writing lock file
```

Thank fuck, it's finally over. Well, for this project. We have a lot of projects that need to be converted to poetry. It'll be worth it though, because pip/pip-compile is worse, and poetry2nix is nice.
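The "only one version at a time" constraint behind all of this is visible in how imports resolve: a module is looked up by name alone, the first match on `sys.path` wins, and the result is cached in `sys.modules` under that name. A minimal, self-cleaning sketch with a hypothetical `mylib` "installed" twice in temporary directories:

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Two hypothetical "installs" of the same package name, at different versions.
with tempfile.TemporaryDirectory() as v1, tempfile.TemporaryDirectory() as v2:
    for root, version in ((v1, "1.0"), (v2, "2.0")):
        package = Path(root) / "mylib"
        package.mkdir()
        (package / "__init__.py").write_text(f"VERSION = {version!r}\n")

    sys.path[:0] = [v1, v2]  # both copies are now on the import search path
    importlib.invalidate_caches()

    import mylib  # resolved by name alone: the first match on sys.path wins

    first = mylib.VERSION
    # The cache in sys.modules is keyed by name only, so asking again just
    # returns the same module object; there is no way to also load 2.0 as "mylib".
    again = importlib.import_module("mylib")
    print(first, again is mylib)  # 1.0 True

    # Clean up so the demo doesn't leak into the rest of the interpreter.
    del sys.modules["mylib"]
    del sys.path[:2]
```

A resolver like Poetry's therefore has to find a single version of every package that satisfies everyone at once, which is where the SolverProblemErrors come from.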

Just for fun, let's try something similar in a different language:

```
$ time cargo add rand syn rand_core libc cfg-if quote proc-macro2 unicode-xid serde bitflags
...
... 1.968 total
$ time cargo update # to rebuild the lockfile
...
... 0.704 total
```

Under 2 seconds each. No literally unfixable issues with incompatible transitive dependencies. It Just Works™. Incredible.

Black <22.3.0 incompatible with Click >=8.1

[T]he most recent release of Click, 8.1.0, is breaking Black. This is because Black imports an internal module so Python 3.6 users with misconfigured LANG continues to work mostly properly. The code that patches click was supposed to be resilient to the module disappearing but the code was catching the wrong exception.

I find the quantity of backlinks to this issue to be greatly amusing. (There are probably way more than shown, too, due to the existence of private repositories.) This is what happens when hobbyists and the industry take a language seriously even though it lacks:

- A language-enforced concept of item privacy

- The ability to have multiple versions of a package in the dependency tree

- Statically checkable error types
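A reduced sketch of how this kind of guard goes wrong (not Black's actual code; `json` stands in for Click and `_module_that_was_removed` is a made-up name): `from package import name` raises a plain `ImportError` when `name` is missing, and `ImportError` is the parent of `ModuleNotFoundError`, not the other way around, so an `except ModuleNotFoundError` guard lets it escape. This is presumably the "wrong exception" from the quote, and without checkable error types nothing flags it before runtime.

```python
def patch_fragile() -> bool:
    """Guard an optional internal import with too narrow an exception."""
    try:
        from json import _module_that_was_removed  # noqa: F401  (hypothetical internal)
    except ModuleNotFoundError:
        return False  # intended: "module gone, nothing to patch"
    return True


def patch_resilient() -> bool:
    try:
        from json import _module_that_was_removed  # noqa: F401
    except ImportError:  # also covers ModuleNotFoundError, which subclasses it
        return False
    return True


try:
    patch_fragile()
    fragile_error = None
except ImportError as exc:
    fragile_error = type(exc).__name__

print(fragile_error)      # ImportError
print(patch_resilient())  # False
```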

Combinatorial exhaustiveness

Let's see what various typecheckers think about the following code:

```python
from typing import Literal, Tuple, Union

SumType = Union[
    Tuple[Literal["foo"], str],
    Tuple[Literal["bar"], int],
]


def assert_int(_: int): pass


def assert_str(_: str): pass


def assert_combinatorial_exhaustion(
    first: SumType,
    second: SumType,
):
    match (first, second):
        case (("foo", x), ("foo", y)):
            assert_str(x)
            assert_str(y)
        case (("foo", x), ("bar", y)):
            assert_str(x)
            assert_int(y)
        case (("bar", x), ("foo", y)):
            assert_int(x)
            assert_str(y)
        case (("bar", x), ("bar", y)):
            assert_int(x)
            assert_int(y)
```

Pytype

I couldn't get this to run on NixOS, so I don't know.

Rating: ?/10

Pyre

I couldn't get this to run on NixOS either, but they do have a web-based version for some reason. Here's what it says:

```
21:23: Incompatible parameter type [6]: In call `assert_str`, for 1st positional only parameter expected `str` but got `Union[int, str]`.
22:23: Incompatible parameter type [6]: In call `assert_str`, for 1st positional only parameter expected `str` but got `Union[int, str]`.
24:23: Incompatible parameter type [6]: In call `assert_str`, for 1st positional only parameter expected `str` but got `Union[int, str]`.
25:23: Incompatible parameter type [6]: In call `assert_int`, for 1st positional only parameter expected `int` but got `Union[int, str]`.
27:23: Incompatible parameter type [6]: In call `assert_int`, for 1st positional only parameter expected `int` but got `Union[int, str]`.
28:23: Incompatible parameter type [6]: In call `assert_str`, for 1st positional only parameter expected `str` but got `Union[int, str]`.
30:23: Incompatible parameter type [6]: In call `assert_int`, for 1st positional only parameter expected `int` but got `Union[int, str]`.
31:23: Incompatible parameter type [6]: In call `assert_int`, for 1st positional only parameter expected `int` but got `Union[int, str]`.
```

pyre is clearly unable to do type narrowing in the match arms. There are no warnings about exhaustiveness, however; is that working properly? Let's pass it the simplest possible code to test for that: